Overview

The Campus Cluster file system provides projects with high-performance storage that is attached to a large compute service and the high-speed CARNE network. Use models include operational data storage and scratch space for analysis, mid-term storage for research data, and central storage for compute and data-sharing workflows in cross-campus collaborations. The file system offers scalable storage, from 1 terabyte up to many petabytes, in dedicated project file sets that are accessible over a wide variety of protocols, including direct HPC compute access, Globus, SFTP, rsync, NFS, and Samba. The storage provides high availability and high reliability through RAID technologies and multi-host presentation to all core servers from redundant controllers, which protects against controller and server failures.

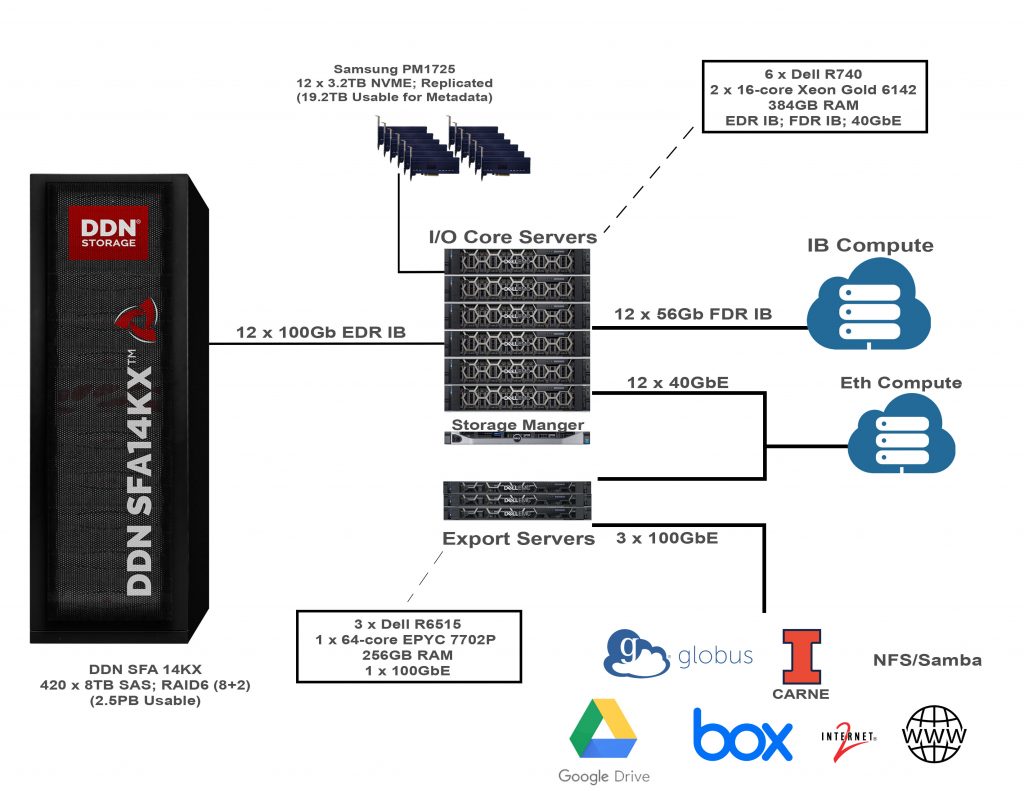

Hardware

The Campus Cluster file system hardware comprises 6 Dell R740 servers, each with 32 cores and 384GB of memory, dual EDR, FDR, and 40GbE interfaces, and 2 Samsung PM1725 3.2TB NVMe SSDs for metadata. These make up the core I/O servers. For bulk storage capacity, the system deploys a DDN SFA 14KX that is connected via 12 x 100Gb EDR InfiniBand and is currently half populated with 420 8TB drives, providing 2.5PB of usable space (capacity can grow to 5PB usable). The system also includes 3 Dell R6515 servers, each with 64 cores, 256GB of memory, and 1 x 100GbE NIC, dedicated to data access via Globus, NFS, SMB, rsync, scp, bbcp, and more.
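The capacity figures above can be cross-checked with a little arithmetic. The following is a minimal sketch in Python, not an official sizing tool. It assumes decimal units (1PB = 1000TB), assumes that a fully populated system holds twice the current drive count, and treats the 12 x 100Gb figure as a nominal link rate rather than measured throughput. The usable fraction it prints is derived from the quoted numbers only; the actual RAID layout is not stated here.

```python
# Figures quoted in the hardware description.
DRIVES_INSTALLED = 420   # drives in the half-populated DDN SFA 14KX
DRIVE_SIZE_TB = 8        # capacity per drive, in TB
USABLE_NOW_PB = 2.5      # usable capacity quoted for the current configuration
USABLE_FULL_PB = 5.0     # usable capacity quoted for a fully populated system
IB_LINKS = 12            # EDR InfiniBand links to the bulk storage
IB_LINK_GBPS = 100       # nominal rate per link, in Gb/s

TB_PER_PB = 1000         # assumption: decimal (SI) units


def raw_capacity_pb(drives: int, drive_size_tb: float) -> float:
    """Raw (pre-RAID) capacity in PB for a given number of drives."""
    return drives * drive_size_tb / TB_PER_PB


def usable_fraction(usable_pb: float, raw_pb: float) -> float:
    """Share of raw capacity that remains usable after redundancy overhead."""
    return usable_pb / raw_pb


raw_now_pb = raw_capacity_pb(DRIVES_INSTALLED, DRIVE_SIZE_TB)
# Assumption: "half populated" means a full system has twice as many drives.
raw_full_pb = raw_capacity_pb(2 * DRIVES_INSTALLED, DRIVE_SIZE_TB)

fraction_now = usable_fraction(USABLE_NOW_PB, raw_now_pb)
fraction_full = usable_fraction(USABLE_FULL_PB, raw_full_pb)

# Nominal aggregate bandwidth of the storage links (not measured throughput).
aggregate_gbps = IB_LINKS * IB_LINK_GBPS
aggregate_gigabytes_per_s = aggregate_gbps / 8

print(f"Raw capacity now:     {raw_now_pb:.2f} PB")
print(f"Raw capacity if full: {raw_full_pb:.2f} PB")
print(f"Usable fraction now:  {fraction_now:.1%}")
print(f"Usable fraction full: {fraction_full:.1%}")
print(f"Nominal link rate:    {aggregate_gbps} Gb/s ({aggregate_gigabytes_per_s:.0f} GB/s)")
```

Under these assumptions, the 420 installed drives amount to 3.36PB raw, so the quoted 2.5PB usable corresponds to roughly three quarters of raw capacity, and the same ratio holds for the 5PB figure.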

Storage and Data Guide

You can learn all the details about how to manage your storage and data on the cluster in our storage and data guide.

Buy Storage

The Illinois Campus Cluster Program now uses NCSA’s center-wide filesystem, Taiga, for project directories. You can request storage space on Taiga by following these instructions. You can learn more about the Taiga system in the Taiga documentation.

Disaster Recovery (DR) Service

The Disaster Recovery service is beneficial for projects that need offsite disaster recovery storage for their primary research storage investment. You can learn more on our buy storage page.